Another sneaky WebRTC optimisation only known by Google Meet (RemoteEstimate RTCP packets)

Google Meet has consistently delivered superior quality among WebRTC applications (at least for web applications). This is especially true compared with typical open-source solutions, but it stands even for most commercial solutions.

The reason is that Google has a team that understands the media stack it has built very well, and can make it behave in ways that solve its own problems in the browser using knobs hidden in WebRTC that only they are aware of.

Some of us still remember how simulcast support was added to WebRTC approximately 12 years ago with SDP munging and without any documentation or note behind the x-google-conference flag, or how “Audio Network Adaptation” was added with a secret string encoding an undocumented protobuf schema to tune settings to improve audio quality.

Today I was trying to debug why a WebRTC application had a less stable bandwidth estimation than Google Meet. The scenario was quite simple: on a perfect network with plenty of bandwidth, suddenly add 50 ms of extra latency. In every other WebRTC application, the bandwidth estimate calculated by the sending browser drops (left graph), while with Google Meet (right graph) it stays perfectly stable.

After spending hours adding logs to Chrome code and debugging the browser’s behaviour under those conditions, I ended up in the AimdRateControl class, which decides when to decrease the bandwidth estimation. For all applications (Google Meet and others), it was entering that point with a lower estimation. Surprisingly, for Google Meet, even if the estimation was lower, it would stay with the previous estimation. The reason is in this code clamping the final estimation.

    new_bitrate = std::min(
        current_bitrate_,
        std::max(new_bitrate, network_estimate_->link_capacity_lower * beta_));

So Chrome will never reduce the bitrate below “network_estimate_->link_capacity_lower * 0.85”. But what is network_estimate_->link_capacity_lower?!

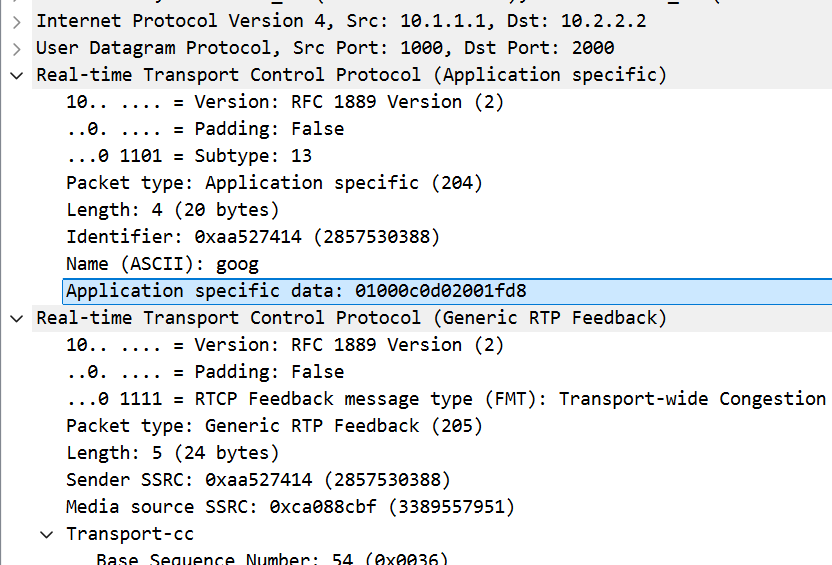

Some more debugging and I figured out that it is information sent by the server in proprietary RTCP messages of type APP with name “goog” and subtype 13.

    bool RTCPReceiver::HandleApp(const rtcp::CommonHeader& rtcp_block,
                                 PacketInformation* packet_information) {
      rtcp::App app;
      if (!app.Parse(rtcp_block)) {
        return false;
      }
      if (app.name() == rtcp::RemoteEstimate::kName &&
          app.sub_type() == rtcp::RemoteEstimate::kSubType) {
        rtcp::RemoteEstimate estimate(std::move(app));
        if (estimate.ParseData()) {
          packet_information->network_state_estimate = estimate.estimate();
        }
      }
      return true;
    }

This extension is described with the following words inside the codebase:

    // The RemoteEstimate packet provides network estimation results from the
    // receive side. This functionality is experimental and subject to change
    // without notice.
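For anyone who wants to spot these packets in their own captures, here is a minimal sketch of how the fixed part of an RTCP APP packet can be recognised. The layout (packet type 204, 5-bit subtype, SSRC, 4-character name) comes from RFC 3550 section 6.7, and the “goog” / 13 values are the ones mentioned above. The names `AppHeader`, `ParseAppHeader` and `LooksLikeRemoteEstimate` are made up for this example and are not Chrome APIs. The payload is deliberately left undecoded.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>
#include <string>
#include <vector>

// Fixed part of an RTCP APP packet (RFC 3550, section 6.7).
// Hypothetical struct for illustration only.
struct AppHeader {
  uint8_t sub_type;      // 5-bit application-defined subtype
  uint32_t sender_ssrc;  // SSRC of the packet sender
  std::string name;      // 4 ASCII characters
  size_t payload_size;   // application-dependent data, in bytes
};

// Returns the header, or nullopt if the buffer is not a well-formed APP
// packet. Padding (the P bit) is ignored to keep the sketch short.
std::optional<AppHeader> ParseAppHeader(const std::vector<uint8_t>& buf) {
  constexpr uint8_t kPacketTypeApp = 204;
  constexpr size_t kFixedSize = 12;  // common header + SSRC + name
  if (buf.size() < kFixedSize) return std::nullopt;
  if ((buf[0] >> 6) != 2) return std::nullopt;  // version must be 2
  if (buf[1] != kPacketTypeApp) return std::nullopt;

  // The length field counts 32-bit words minus one.
  const size_t total =
      (static_cast<size_t>((buf[2] << 8) | buf[3]) + 1) * 4;
  if (total < kFixedSize || total > buf.size()) return std::nullopt;

  AppHeader header;
  header.sub_type = buf[0] & 0x1F;
  header.sender_ssrc = (uint32_t{buf[4]} << 24) | (uint32_t{buf[5]} << 16) |
                       (uint32_t{buf[6]} << 8) | uint32_t{buf[7]};
  header.name.assign(buf.begin() + 8, buf.begin() + 12);
  header.payload_size = total - kFixedSize;
  return header;
}

// Name and subtype as reported in the text above.
bool LooksLikeRemoteEstimate(const AppHeader& header) {
  return header.name == "goog" && header.sub_type == 13;
}
```

Feeding each RTCP packet from a decrypted capture through `ParseAppHeader` and filtering with `LooksLikeRemoteEstimate` should be enough to count how often the server sends them.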

This RTCP message was added back in 2019. Back then it was wired up to the SDP, but even though we have looked at Google Meet frequently since then, we never saw the corresponding SDP, and it is still not there today.

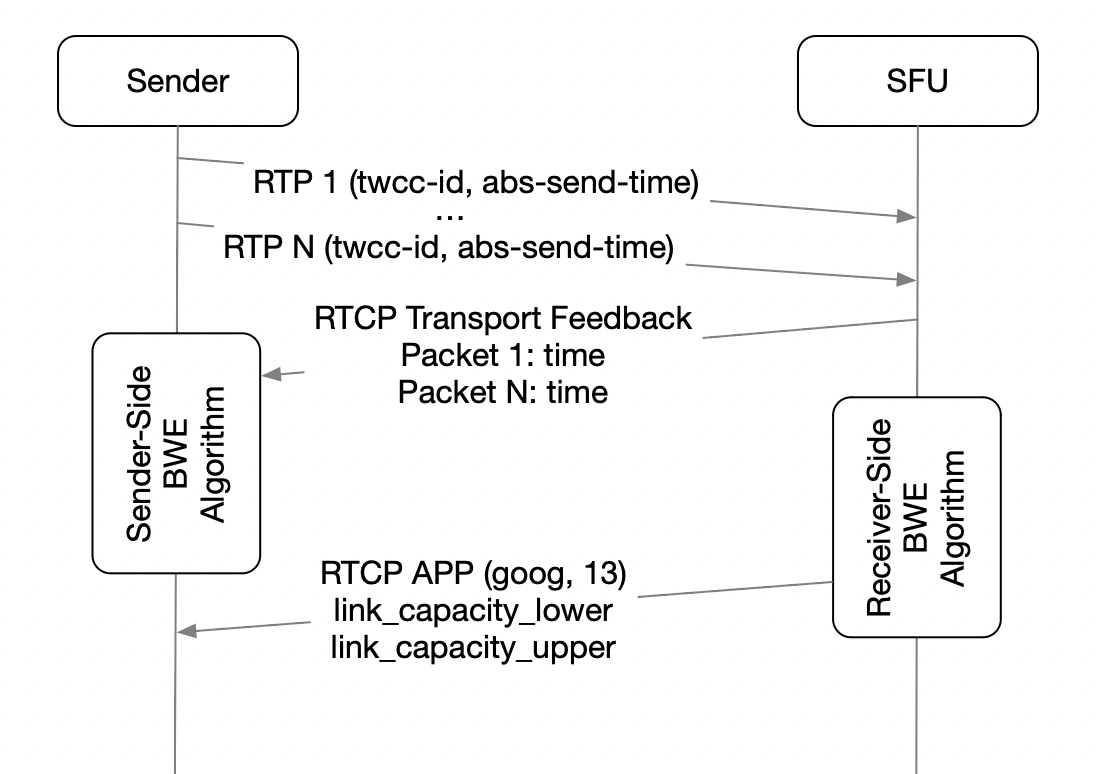

So the server sends an RTCP APP message every 50 ms with a bandwidth estimation calculated on the server side.

That RTCP RemoteEstimate message includes two values encoded inside:

- link_capacity_lower

- link_capacity_upper

The lower value works as a floor, so that the sender does not react so fast when its own bandwidth estimation detects a sudden drop but the server-side estimation is still stable. The use of the upper value is disabled behind a flag in AimdRateControl, but maybe it is used in other parts of the WebRTC codebase.
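To make the effect of the lower value concrete, here is a small sketch that mirrors the clamp quoted earlier, with plain integers in bits per second instead of WebRTC's own rate type. `ClampDecrease` is a hypothetical helper, not Chrome code, and the 85% factor is the 0.85 mentioned above, written as an integer percentage only to avoid floating-point rounding in the example.

```cpp
#include <algorithm>
#include <cstdint>
#include <optional>

// Hypothetical stand-in for the clamp in AimdRateControl.
// proposed_bps is the lower bitrate the sender-side estimator wants to use.
// link_capacity_lower_bps is empty when no RemoteEstimate has been received.
int64_t ClampDecrease(int64_t current_bps, int64_t proposed_bps,
                      std::optional<int64_t> link_capacity_lower_bps,
                      int64_t beta_percent = 85) {
  int64_t new_bps = proposed_bps;
  if (link_capacity_lower_bps) {
    // Never go below beta * link_capacity_lower.
    const int64_t floor_bps = *link_capacity_lower_bps * beta_percent / 100;
    new_bps = std::max(new_bps, floor_bps);
  }
  // A decrease must never turn into an increase.
  return std::min(current_bps, new_bps);
}
```

With a current estimate of 2.5 Mbps and a proposed drop to 1.8 Mbps, `ClampDecrease(2500000, 1800000, 3000000)` stays at 2500000 because the floor (2.55 Mbps) is above the current bitrate, which matches the flat Google Meet graph. Without a remote estimate, `ClampDecrease(2500000, 1800000, std::nullopt)` drops to 1800000 like every other application.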

And now the even harder question. How are those values calculated? They provide two different values and they look like different bandwidth estimations.

After I shared this with Philipp Hancke, he suggested checking whether it works with the abs-send-time extension disabled, and he was right: it doesn’t work without it. So it looks similar to the old REMB implementation, but providing two values (upper and lower) instead of a single one that acts as just a maximum for the sender.

In essence the reliance on abs-send-time suggests that a remote bitrate estimator is running on the server. Looking at this issue and the old emails this has quite possibly been happening since 2019, hidden in plain sight.

To verify this hypothesis, I recompiled Chrome to disregard those proprietary RTCP App messages and, there it was! Google Meet now performs as well as (or as poorly as) other implementations under the same testing network conditions.

So it looks like Google went from receiver-side bandwidth estimation (REMB; RTCP REMB could have been used to transport an upper capacity but not a lower one) to sender-side bandwidth estimation (transport feedback) to just do both :)

How necessary the RemoteEstimate extension is for quality in most scenarios is unclear. It is probably not critical, given that no WebRTC application out there uses it apart from Google Meet as far as we know, but it looks like it provides some value, so other WebRTC applications should probably go and copy it. We only need to figure out how to calculate the lower and upper values and send them to the sender every 50 ms.

Thank you very much to Philipp Hancke for his review and feedback.

You can follow me on Twitter if you are interested in Real Time Communications or want to comment on the topic of this post.
