OpenAI WebRTC API Review

OpenAI has added a new interface to its Realtime models: they now support WebRTC!

Given the people working on it, I'm sure it is great, so as usual let's take a look under the hood at how the audio is transmitted.

Signalling and connection establishment

There are two options for establishing a Realtime session with the OpenAI servers:

- WebSocket: a much nicer API with no ugly SDPs involved, but the audio travels over the WebSocket itself (TCP), which makes it less suited to public networks.

- HTTP/WebRTC: an uglier API that involves an SDP offer/answer exchange, but the media is carried over WebRTC, which works well on real-world networks, and that is critical for most use cases.

In the rest of the post we will focus only on the latter (HTTP/WebRTC), which is the more interesting one.
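With HTTP/WebRTC signalling, the browser creates an SDP offer, POSTs it to OpenAI authenticated with the ephemeral key described in the next section, and receives the SDP answer in the response body. The Python sketch below only builds and sends that request; the offer itself would come from `RTCPeerConnection.createOffer()` in the browser. The endpoint URL, the `model` query parameter and the `application/sdp` content type are assumptions based on OpenAI's browser example at the time of writing, and `build_sdp_offer_request` and `exchange_sdp` are hypothetical helper names.

```python
import urllib.parse
import urllib.request

# Assumed endpoint, taken from OpenAI's browser example at the time of writing.
REALTIME_URL = "https://api.openai.com/v1/realtime"


def build_sdp_offer_request(offer_sdp: str, ephemeral_key: str,
                            model: str = "gpt-4o-realtime-preview-2024-12-17") -> urllib.request.Request:
    """Build (but do not send) the HTTP POST that carries the SDP offer."""
    query = urllib.parse.urlencode({"model": model})
    return urllib.request.Request(
        f"{REALTIME_URL}?{query}",
        data=offer_sdp.encode("utf-8"),  # the raw SDP offer is the whole request body
        headers={
            "Authorization": f"Bearer {ephemeral_key}",  # ephemeral key, never the API secret
            "Content-Type": "application/sdp",
        },
        method="POST",
    )


def exchange_sdp(offer_sdp: str, ephemeral_key: str) -> str:
    """Send the offer and return the SDP answer from the response body."""
    request = build_sdp_offer_request(offer_sdp, ephemeral_key)
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.read().decode("utf-8")
```

In the browser, the returned text would then be applied with `setRemoteDescription({ type: "answer", sdp })`.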

Authentication

The first step in using the Realtime API to send audio directly from clients to the OpenAI servers is to obtain an ephemeral key using your OpenAI API secret. This is a simple HTTP request that, for testing, you can make from the command line:

`curl -X POST -H "Content-Type: application/json" -H "Authorization: Bearer $OPENAI_API_SECRET" -d '{"model": "gpt-4o-realtime-preview-2024-12-17", "voice": "verse"}' https://api.openai.com/v1/realtime/sessions`

Interestingly, the retrieved credentials are very short-lived: they are valid for only 60 seconds.
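Because the key expires so quickly, a backend would typically mint it right before the client connects and check that it is still valid before handing it out. The sketch below is a Python equivalent of the curl command plus such a check. It assumes the response carries the key and its Unix expiry timestamp in `client_secret.value` and `client_secret.expires_at`, as in OpenAI's docs at the time of writing; `create_session_request` and `ephemeral_key_if_valid` are hypothetical names, and the 5-second safety margin is arbitrary.

```python
import json
import time
import urllib.request
from typing import Optional

SESSIONS_URL = "https://api.openai.com/v1/realtime/sessions"


def create_session_request(api_secret: str,
                           model: str = "gpt-4o-realtime-preview-2024-12-17",
                           voice: str = "verse") -> urllib.request.Request:
    """Python equivalent of the curl command above (built, not sent)."""
    body = json.dumps({"model": model, "voice": voice}).encode("utf-8")
    return urllib.request.Request(
        SESSIONS_URL,
        data=body,
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_secret}"},
        method="POST",
    )


def ephemeral_key_if_valid(session: dict, now: Optional[float] = None,
                           margin_s: float = 5.0) -> Optional[str]:
    """Return the ephemeral key, or None if it is missing or expires within margin_s.

    Assumes the response shape {"client_secret": {"value": ..., "expires_at": <unix seconds>}}.
    """
    now = time.time() if now is None else now
    secret = session.get("client_secret") or {}
    expires_at = secret.get("expires_at")
    if expires_at is None or expires_at - now <= margin_s:
        return None
    return secret.get("value")
```

Sending it would be `json.load(urllib.request.urlopen(create_session_request(secret)))`; the backend then passes only the ephemeral key to the browser, never the API secret.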

The response from that API also includes things like `"audio_format": "pcm16"`, which looks outdated given that the API now also supports Opus with the new WebRTC interface. It also includes some voice and silence detection parameters, and I'm not sure whether developers have any way to tune them.

Connectivity

The WebRTC connection is established over UDP and only UDP. There are no TURN servers and no ICE-TCP or SSLTCP candidates, so don't expect this connection to work in restrictive corporate networks.

This could be OK, as you can fall back to the WebSocket API in those cases, although in my opinion OpenAI should provide an SDK that handles that fallback, or simply provide TURN servers (iceServers) or include TCP candidates in the answer.
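That fallback can be sketched in a transport-agnostic way: try WebRTC first and, if it does not connect within a timeout or fails outright, switch to the WebSocket API. `connect_with_fallback`, the two connector coroutines and the 5-second timeout are all hypothetical; in a browser, the WebRTC attempt would typically count as failed when the peer connection's `iceConnectionState` becomes `failed` or never reaches `connected`.

```python
import asyncio
from typing import Awaitable, Callable, Tuple

# Hypothetical connectors: each coroutine returns an established session object.
Connector = Callable[[], Awaitable[object]]


async def connect_with_fallback(connect_webrtc: Connector, connect_websocket: Connector,
                                webrtc_timeout_s: float = 5.0) -> Tuple[str, object]:
    """Try the UDP-only WebRTC transport first, then fall back to the WebSocket API."""
    try:
        session = await asyncio.wait_for(connect_webrtc(), timeout=webrtc_timeout_s)
        return "webrtc", session
    except (asyncio.TimeoutError, OSError):
        # UDP blocked or ICE failed (no TURN or ICE-TCP candidates to fall back on),
        # so switch to the WebSocket API, which runs over TCP/TLS.
        session = await connect_websocket()
        return "websocket", session
```

Returning the transport name alongside the session lets the caller log how often the fallback is actually needed.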

All the examples also ignore the fact that the connection can drop at some point, in which case you will need to re-establish it. That's another small feature that a future OpenAI SDK could handle transparently.
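Reconnecting is mostly a matter of retrying with backoff. A minimal sketch, assuming a hypothetical `connect` coroutine that mints a fresh ephemeral key and redoes the SDP exchange on every call (the old key cannot be reused once its 60 seconds are up); the delay values are arbitrary.

```python
import asyncio
import random
from typing import Awaitable, Callable, List, Optional


def backoff_delays(attempts: int, base_s: float = 0.5, cap_s: float = 8.0) -> List[float]:
    """Exponential backoff schedule: base_s, 2*base_s, 4*base_s, ... capped at cap_s."""
    return [min(cap_s, base_s * (2 ** i)) for i in range(attempts)]


async def reconnect(connect: Callable[[], Awaitable[object]], attempts: int = 5,
                    base_s: float = 0.5, jitter: bool = True) -> Optional[object]:
    """Call `connect` until it succeeds or attempts run out; return None if all fail."""
    delays = backoff_delays(attempts, base_s)
    for attempt, delay in enumerate(delays):
        try:
            # `connect` is expected to fetch a NEW ephemeral key each time:
            # the previous one is only valid for 60 seconds.
            return await connect()
        except OSError:  # includes ConnectionError and TimeoutError
            if attempt == len(delays) - 1:
                break
            # Random jitter keeps many clients from retrying in lockstep.
            await asyncio.sleep(delay * random.uniform(0.5, 1.0) if jitter else delay)
    return None
```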

Interestingly, the UDP traffic uses the old trick of using port 3478 (the standard STUN/TURN port) to get through firewalls that allow STUN traffic. Also, I'm in Europe and the WebRTC server assigned to me is in the US, but I guess that will change once the API is out of beta.
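To check these connectivity properties on a session of your own, you can inspect the `a=candidate` lines of the SDP answer. A minimal sketch with hypothetical helpers `parse_candidates` and `connectivity_summary`, following the candidate attribute syntax of RFC 8839: an ICE-TCP candidate would show `tcp` as its transport, and the port field reveals the 3478 trick.

```python
from typing import Dict, List


def parse_candidates(sdp: str) -> List[Dict[str, object]]:
    """Extract component, transport, address, port and type from a=candidate lines.

    Field order follows the ICE candidate attribute (RFC 8839):
    foundation component transport priority address port "typ" type [extensions...]
    """
    candidates = []
    for line in sdp.splitlines():
        line = line.strip()
        if not line.startswith("a=candidate:"):
            continue
        fields = line[len("a=candidate:"):].split()
        candidates.append({
            "component": int(fields[1]),
            "transport": fields[2].lower(),  # "udp", or "tcp" for ICE-TCP candidates
            "address": fields[4],
            "port": int(fields[5]),
            "type": fields[7],  # host, srflx, prflx or relay
        })
    return candidates


def connectivity_summary(sdp: str) -> Dict[str, object]:
    """Summarize transports, ports and candidate types found in an SDP blob."""
    cands = parse_candidates(sdp)
    return {
        "udp_only": bool(cands) and all(c["transport"] == "udp" for c in cands),
        "has_tcp": any(c["transport"] == "tcp" for c in cands),
        "ports": sorted({c["port"] for c in cands}),
        "types": sorted({c["type"] for c in cands}),
    }
```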

Audio transmission

As expected with WebRTC, the audio is transmitted using the Opus codec at 50 packets per second (one packet every 20 ms). G.711 (PCMU/PCMA) and G.722 are also negotiated, so they probably work too, although I don't see a reason to include them.
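At 50 packets per second the per-packet headers add up quickly. A back-of-the-envelope sketch, assuming IPv4 (20 bytes), UDP (8), a plain RTP header with no extensions (12) and the 10-byte authentication tag of the AES_CM_128_HMAC_SHA1_80 SRTP profile; the profile actually in use depends on the DTLS negotiation, and the helper names are hypothetical.

```python
def packets_per_second(frame_ms: float) -> float:
    """One RTP packet per Opus frame."""
    return 1000.0 / frame_ms


def header_overhead_kbps(frame_ms: float = 20.0, ip_bytes: int = 20, udp_bytes: int = 8,
                         rtp_bytes: int = 12, srtp_tag_bytes: int = 10) -> float:
    """Bitrate (kbit/s) spent on headers alone in one direction, excluding the Opus payload.

    Defaults assume IPv4, a plain 12-byte RTP header (no extensions) and the
    80-bit SRTP auth tag of AES_CM_128_HMAC_SHA1_80.
    """
    per_packet = ip_bytes + udp_bytes + rtp_bytes + srtp_tag_bytes
    return per_packet * 8 * packets_per_second(frame_ms) / 1000.0
```

With 20 ms frames that is 50 bytes per packet, or about 20 kbit/s of headers per direction before any audio payload, so sending fewer packets (during silence, or by using longer frames) directly reduces it.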

The full SDP answer from the server is shown below:

v=0

o=- 5732503706549353267 1734545489 IN IP4 127.0.0.1

s=-

t=0 0

a=group:BUNDLE 0 1

a=extmap-allow-mixed

a=msid-semantic: WMS realtimeapi

m=audio 3478 UDP/TLS/RTP/SAVPF 111 9 0 8

c=IN IP4 13.65.63.209

a=rtcp:3478 IN IP4 13.65.63.209

a=candidate:3461111247 1 udp 2130706431 13.65.63.209 3478 typ host generation 0

a=candidate:3461111247 2 udp 2130706431 13.65.63.209 3478 typ host generation 0

a=ice-ufrag:WGbWWofbaCZKJNIq

a=ice-pwd:SLqkjRNAwXPdKZsRmdlukcvcoPqUEsPw

a=fingerprint:sha-256 D7:A3:67:D9:49:5E:81:C5:A8:41:15:C1:A4:7A:FC:6F:51:8D:6F:6F:81:C2:A3:EB:BC:65:C0:B2:8A:26:4E:A7

a=setup:active

a=mid:0

a=extmap:3 http://www.ietf.org/id/draft-holmer-rmcat-transport-wide-cc-extensions-01

a=sendrecv

a=msid:realtimeapi audio

a=rtcp-mux

a=rtcp-rsize

a=rtpmap:111 opus/48000/2

a=rtcp-fb:111 transport-cc

a=fmtp:111 minptime=10;useinbandfec=1

a=rtpmap:9 G722/8000

a=rtpmap:0 PCMU/8000

a=rtpmap:8 PCMA/8000

a=ssrc:2472058689 cname:realtimeapi

a=ssrc:2472058689 msid:realtimeapi audio

m=application 9 UDP/DTLS/SCTP webrtc-datachannel

c=IN IP4 0.0.0.0

a=ice-ufrag:WGbWWofbaCZKJNIq

a=ice-pwd:SLqkjRNAwXPdKZsRmdlukcvcoPqUEsPw

a=fingerprint:sha-256 D7:A3:67:D9:49:5E:81:C5:A8:41:15:C1:A4:7A:FC:6F:51:8D:6F:6F:81:C2:A3:EB:BC:65:C0:B2:8A:26:4E:A7

a=setup:active

a=mid:1

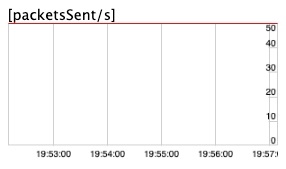

a=sctp-port:5000I was expecting discontinuous transmission enabled for audio to reduce the bandwidth usage given that the user is probably in silence most of the time but I guess the API is not yet optimised. You can see how it sends and receives 50 packets per second all the time:

Another option I would consider is reducing the uplink audio bitrate, given that the LLM probably doesn't need amazing audio quality to understand the content and the emotions associated with it.
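Both tweaks map onto standard Opus fmtp parameters from RFC 7587: `usedtx=1` asks the sender to use DTX and `maxaveragebitrate` caps the average bitrate. These parameters express what the side that wrote them prefers to receive, so for the uplink one common trick is to munge the Opus `a=fmtp` line of the answer before calling `setRemoteDescription()`, since browsers such as Chrome generally configure their encoder from it. A minimal sketch, where `set_opus_fmtp` is a hypothetical helper and the 16000 bit/s value is arbitrary; this is untested against OpenAI's server.

```python
import re
from typing import Dict


def set_opus_fmtp(sdp: str, params: Dict[str, str]) -> str:
    """Add or override parameters on the Opus a=fmtp line(s) of an SDP blob.

    Assumes the fmtp line already exists (as in the answer above); it does not create one.
    """
    # Payload types mapped to Opus, e.g. "a=rtpmap:111 opus/48000/2" -> "111".
    opus_pts = set(re.findall(r"^a=rtpmap:(\d+) opus/", sdp, flags=re.MULTILINE | re.IGNORECASE))
    lines = []
    for line in sdp.splitlines():
        match = re.match(r"a=fmtp:(\d+) (.*)", line)
        if match and match.group(1) in opus_pts:
            existing = dict(
                item.strip().split("=", 1) for item in match.group(2).split(";") if "=" in item
            )
            existing.update(params)  # new values win over existing ones
            line = f"a=fmtp:{match.group(1)} " + ";".join(f"{k}={v}" for k, v in existing.items())
        lines.append(line)
    return "\r\n".join(lines) + "\r\n"
```

For example, `set_opus_fmtp(answer_sdp, {"usedtx": "1", "maxaveragebitrate": "16000"})` turns `a=fmtp:111 minptime=10;useinbandfec=1` into `a=fmtp:111 minptime=10;useinbandfec=1;usedtx=1;maxaveragebitrate=16000`.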

The transport-cc RTP header extension is also negotiated, although it is of little use in this audio-only scenario and could probably be removed to eliminate a tiny amount of per-packet overhead.
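If you wanted to drop it from the client side, one option is to strip it from the offer text before POSTing it to OpenAI, so that the answer should not include it either. A sketch, assuming the extension is identified by the URI shown in the answer above and that the server does not insist on it (untested); `strip_transport_cc` and `extension_overhead_kbps` are hypothetical helpers. For scale: when transport-cc is the only header extension, the RFC 8285 one-byte form costs 8 bytes per packet, about 3.2 kbit/s at 50 packets per second.

```python
TRANSPORT_CC_URI = "http://www.ietf.org/id/draft-holmer-rmcat-transport-wide-cc-extensions-01"


def strip_transport_cc(sdp: str) -> str:
    """Drop the transport-cc header extension and its RTCP feedback lines from an SDP blob."""
    kept = []
    for line in sdp.splitlines():
        if line.startswith("a=extmap:") and TRANSPORT_CC_URI in line:
            continue  # the RTP header extension itself
        if line.startswith("a=rtcp-fb:") and line.endswith(" transport-cc"):
            continue  # the matching transport-wide congestion control feedback
        kept.append(line)
    return "\r\n".join(kept) + "\r\n"


def extension_overhead_kbps(ext_bytes: int = 8, packets_per_s: float = 50.0) -> float:
    """Extra bitrate (kbit/s) caused by ext_bytes of RTP header extension per packet."""
    return ext_bytes * 8 * packets_per_s / 1000.0
```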

In terms of audio reliability, Opus in-band FEC (`useinbandfec=1`) is the only mechanism in place: there is no RED and there are no retransmissions. This is probably fine for this specific use case, since the LLM should be able to infer missing parts of sentences from the context. Still, I don't see any reason not to include at least retransmissions.
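To see which of these mechanisms a given session actually negotiates, the relevant SDP attributes can be checked directly. A minimal sketch using plain regular expressions; `audio_reliability_features` is a hypothetical helper and only recognizes the attribute patterns noted in its comments.

```python
import re
from typing import Dict


def audio_reliability_features(sdp: str) -> Dict[str, bool]:
    """Report which loss-recovery mechanisms an SDP negotiates for audio."""
    opus_pts = re.findall(r"^a=rtpmap:(\d+) opus/", sdp, flags=re.MULTILINE | re.IGNORECASE)
    # Opus in-band FEC is signalled on the Opus fmtp line.
    fec = any(
        re.search(rf"^a=fmtp:{pt} .*useinbandfec=1", sdp, flags=re.MULTILINE) for pt in opus_pts
    )
    return {
        "opus_inband_fec": fec,
        # RED (RFC 2198) appears as its own payload type, e.g. "a=rtpmap:63 red/48000/2".
        "red": re.search(r"^a=rtpmap:\d+ red/", sdp, flags=re.MULTILINE | re.IGNORECASE) is not None,
        # Retransmissions need generic NACK feedback ("a=rtcp-fb:<pt> nack")...
        "nack": re.search(r"^a=rtcp-fb:\d+ nack\s*$", sdp, flags=re.MULTILINE) is not None,
        # ...and usually an RTX payload type (RFC 4588).
        "rtx": re.search(r"^a=rtpmap:\d+ rtx/", sdp, flags=re.MULTILINE | re.IGNORECASE) is not None,
    }
```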

Data

A reliable data channel is established to receive events from the server. This is a great feature: it provides a lot of flexibility, as well as a channel to control things dynamically (like the voice used) without having to recreate the connection.
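Controlling the session this way means sending JSON client events over the same data channel. A sketch that builds a `session.update` event, assuming the event type and `session` payload shape from OpenAI's Realtime API documentation at the time of writing, including the `turn_detection` fields (which correspond to the silence detection parameters returned when the session is created); `session_update_event` is a hypothetical helper and the values are illustrative only. In the browser, the resulting string would be passed to the data channel's `send()` method.

```python
import json
from typing import Any


def session_update_event(**session_fields: Any) -> str:
    """Serialize a session.update client event to send over the Realtime data channel."""
    if not session_fields:
        raise ValueError("session.update needs at least one session field")
    return json.dumps({"type": "session.update", "session": session_fields})


# Example: tweak server-side voice activity detection without reconnecting.
# The values are illustrative, not recommendations.
vad_update = session_update_event(
    turn_detection={"type": "server_vad", "threshold": 0.6, "silence_duration_ms": 500}
)
```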

As soon as you start sending and receiving audio, you receive a lot of events. Some of the most interesting ones are:

- `input_audio_buffer.speech_started`: notifies when the user starts speaking.

- `input_audio_buffer.speech_stopped`: notifies when the user stops speaking.

- `response.audio_transcript.delta`: includes the deltas of the text that you are also receiving as audio.
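Handling these events is a simple dispatch on the `type` field of each JSON message. A sketch, assuming each data channel message is a single JSON object and that transcript deltas carry their text in a `delta` field (as in OpenAI's docs at the time of writing); `ConversationState` and `handle_event` are hypothetical names.

```python
import json
from dataclasses import dataclass, field
from typing import List


@dataclass
class ConversationState:
    user_speaking: bool = False
    transcript_parts: List[str] = field(default_factory=list)

    @property
    def transcript(self) -> str:
        """Assistant transcript accumulated so far from the deltas."""
        return "".join(self.transcript_parts)


def handle_event(state: ConversationState, raw_message: str) -> None:
    """Update local state from one server event received on the data channel."""
    event = json.loads(raw_message)
    kind = event.get("type")
    if kind == "input_audio_buffer.speech_started":
        state.user_speaking = True  # server-side VAD detected the user talking
    elif kind == "input_audio_buffer.speech_stopped":
        state.user_speaking = False
    elif kind == "response.audio_transcript.delta":
        state.transcript_parts.append(event.get("delta", ""))
    # All other event types are ignored in this sketch.
```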

Interestingly (and as expected), the text arrives much faster than the audio. That makes me wonder whether, for some use cases, reading the text might be a more effective "conversation" than hearing the voice.

Conclusions

It is great to see new products adopting WebRTC and providing real-time interfaces. The functionality looks great for a beta version, and I'm sure it will evolve quickly.

I'm still not convinced that Opus and WebRTC are the best fit for this specific type of use case, where you are talking to a "machine" rather than "having a meeting". I hope that in the future we end up using more custom codecs and transports tuned for this use case, but I could be wrong, and in any case this is a very simple and great approach for the time being.
