Target Bitrates vs Max Bitrates

Not all the simulcast layers have the same encoding quality

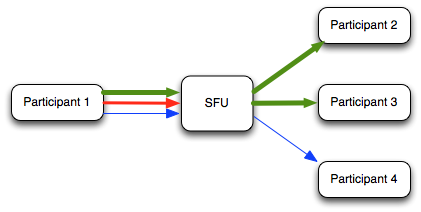

When using simulcast video encoding with WebRTC, the encoder generates different versions or layers of the video input with varying resolutions. Using this technique, a multiparty video server (SFU) can adapt the video that each participant in a room receives based on factors such as available bandwidth, CPU/battery level, or the rendering size of those videos in each receiver.

These different versions of the video have varying resolutions, but what about their encoding quality? For example, if a user is receiving a video and rendering it in a 640x360 window, do they get the same quality from the 640x360 layer as from the highest layer of 1280x720?

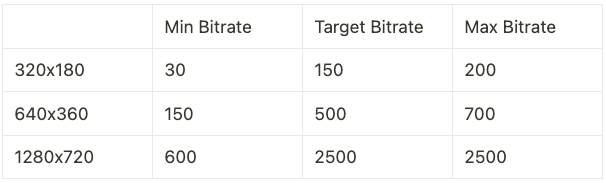

To answer this question about the quality of each resolution, we can first look at the bitrate used by each one. The interesting thing is that the bitrate of a given resolution is not always the same, and this is where we need to distinguish between min bitrate, target bitrate and max bitrate. Each resolution has these three parameters associated with it:

- Min bitrate is the minimum bitrate that must be available for this layer to be enabled.

- Target bitrate is the bitrate used in ideal conditions when there are other higher layers enabled.

- Max bitrate is the bitrate used in ideal conditions when this is the highest layer enabled.

For example, here are the values configured for different simulcast layers in VP8 (you can check them for other layers and codecs at simulcast.cc):

You can see how this works with a quick test that enables and disables different simulcast layers (in this case I used the simulcast-playground). The graphs show the bitrate of each layer under different conditions: the lowest resolution layer is at the top, the middle layer is below it, and the highest resolution layer is at the bottom.

- Initially all the layers are enabled and are sent as: 320x180 (150kbps), 640x360 (500kbps), 1280x720 (2500kbps)

- When the highest layer is disabled, part of that bitrate is used for the middle layer and they are sent as: 320x180 (150kbps), 640x360 (700kbps)

- When the middle layer is also disabled only the lowest is sent as: 320x180 (200kbps)
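The three cases above follow directly from the target/max rule, and a small model makes that explicit. This is only a sketch of the rule as described in this post, not the libwebrtc implementation: `SimulcastLayer` and `idealBitrates` are hypothetical names, min bitrates are ignored because the model assumes there is always enough bandwidth, and the 1280x720 target is assumed equal to its max since the post does not state it.

```typescript
// Bitrates in kbps, taken from the figures quoted in this post.
// Assumption: the 1280x720 target is set equal to its max (not stated in the
// post); it is never used here because no layer sits above it.
interface SimulcastLayer {
  name: string;
  targetKbps: number; // used when a higher layer is also enabled
  maxKbps: number; // used when this is the highest enabled layer
}

// Ordered from lowest to highest resolution.
const layers: SimulcastLayer[] = [
  { name: "320x180", targetKbps: 150, maxKbps: 200 },
  { name: "640x360", targetKbps: 500, maxKbps: 700 },
  { name: "1280x720", targetKbps: 2500, maxKbps: 2500 },
];

// Hypothetical helper: enabled[i] says whether all[i] is active.
// The highest enabled layer gets its max bitrate, the others their target.
function idealBitrates(
  all: SimulcastLayer[],
  enabled: boolean[],
): Record<string, number> {
  const highest = enabled.lastIndexOf(true);
  const result: Record<string, number> = {};
  all.forEach((layer, i) => {
    if (!enabled[i]) return;
    result[layer.name] = i === highest ? layer.maxKbps : layer.targetKbps;
  });
  return result;
}

const allOn = idealBitrates(layers, [true, true, true]);
const noHigh = idealBitrates(layers, [true, true, false]);
const lowOnly = idealBitrates(layers, [true, false, false]);

console.log(JSON.stringify(allOn)); // {"320x180":150,"640x360":500,"1280x720":2500}
console.log(JSON.stringify(noHigh)); // {"320x180":150,"640x360":700}
console.log(JSON.stringify(lowOnly)); // {"320x180":200}
```

Real encoders will not hit these numbers exactly, since the actual bitrate also depends on the content and on the available bandwidth.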

Is this good or bad? 🙂

For the original purpose of simulcast (adapting the video sent to each receiver according to its available bandwidth), this works well, since lower quality is expected when bandwidth is limited.

For the other purposes, such as adapting to CPU/battery level or to the rendering size, the result is less ideal. With the default bitrate settings, forwarding a lower layer while higher layers are still enabled means the participant receives both a lower resolution and a lower encoding quality, even if bandwidth is not the limiting factor.

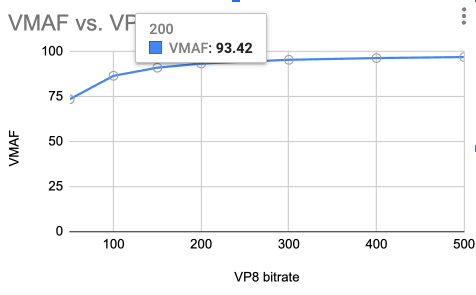

To measure the quality impact, I tested VMAF scores by encoding videos with target and max bitrates using ffmpeg’s real-time encoding settings. While this method differs slightly from WebRTC’s configuration, it provides a reasonable comparison.

Looking at the lowest resolution (320x180), we find good quality above 100kbps, with notably better results at the max bitrate of 200kbps compared to the target bitrate of 150kbps (a difference of 2.5 VMAF points).

This quality degradation affects both simulcast and SVC configurations. SVC faces an additional challenge: the bitrates for different resolutions are hardcoded in WebRTC and cannot be modified using the setParameters API.
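For simulcast, the per-layer cap can be changed through the `maxBitrate` field of each encoding returned by `getParameters()`. The following is a minimal sketch of that flow, not a recommendation of specific values. The `EncodingLike`, `ParametersLike` and `SenderLike` types are stand-ins for the browser's `RTCRtpEncodingParameters`, `RTCRtpSendParameters` and `RTCRtpSender` so the code runs outside a browser, the helper names are hypothetical, and the `rid` values are assumed to be whatever you configured when adding the transceiver. How the encoder derives the target bitrate from a new cap is not covered here, so check the resulting bitrates in your own tests.

```typescript
// Minimal structural stand-ins for the browser types (assumption: only the
// fields used in this sketch are modelled).
interface EncodingLike {
  rid?: string;
  maxBitrate?: number; // bits per second
}
interface ParametersLike {
  encodings: EncodingLike[];
}
interface SenderLike {
  getParameters(): ParametersLike;
  setParameters(params: ParametersLike): Promise<void>;
}

// Hypothetical helper: set maxBitrate (in bps) on every encoding whose rid
// has an entry in maxBitrateByRid. Encodings without a match are untouched.
function withMaxBitrates(
  params: ParametersLike,
  maxBitrateByRid: Record<string, number>,
): ParametersLike {
  for (const encoding of params.encodings) {
    if (encoding.rid === undefined) continue;
    const cap = maxBitrateByRid[encoding.rid];
    if (cap !== undefined) {
      encoding.maxBitrate = cap;
    }
  }
  return params;
}

// Hypothetical helper: read the current parameters, apply the caps and
// write them back with setParameters.
async function setLayerMaxBitrates(
  sender: SenderLike,
  maxBitrateByRid: Record<string, number>,
): Promise<ParametersLike> {
  const params = withMaxBitrates(sender.getParameters(), maxBitrateByRid);
  await sender.setParameters(params);
  return params;
}
```

In a browser this could be called as `setLayerMaxBitrates(sender, { low: 200000 })`, where `sender` is the video `RTCRtpSender` and `low` is an assumed `rid`.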

In summary, a layer’s bitrate varies depending on whether higher layers are enabled. While requesting lower resolutions works well in most cases, remember that lower layers have both reduced resolution and lower encoding quality.
